Federated Learning Research Activities

Introduction to Federated Learning (FL)

Federated Learning (FL) is a distributed machine learning paradigm that trains models across multiple devices without sharing raw data. Our research group has worked on FL techniques for several years, producing numerous scientific publications and two comprehensive surveys. Our expertise ranges from addressing data heterogeneity to optimizing model aggregation.
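To make the idea concrete, the following minimal sketch shows sample-weighted averaging of client weights in the style of the widely used FedAvg scheme. It is an illustration under stated assumptions, not the aggregation method from our publications: the `fedavg` function, the `Weights` type, and the two example clients are hypothetical names introduced only for this example.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def fedavg(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average client weights, weighting each client by its local sample count."""
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("at least one client must report a positive sample count")
    first_weights, _ = updates[0]
    aggregated: Weights = {
        name: [0.0] * len(vals) for name, vals in first_weights.items()
    }
    for weights, n in updates:
        share = n / total  # fraction of all training samples held by this client
        for name, vals in weights.items():
            for i, v in enumerate(vals):
                aggregated[name][i] += share * v
    return aggregated


# Two hypothetical clients: only weights and sample counts reach the server,
# never the raw training data.
client_a = ({"layer": [1.0, 2.0]}, 30)
client_b = ({"layer": [3.0, 4.0]}, 10)
global_weights = fedavg([client_a, client_b])
```

Here the client with 30 samples contributes three quarters of each averaged value, so clients with more data have more influence on the global model.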

We were recently awarded a PRIN 2022 research project of national significance, titled “GANDALF.” The project aims to advance FL methodologies that improve performance, privacy, and sustainability across applications, particularly in environments with heterogeneous devices.

Research Activities

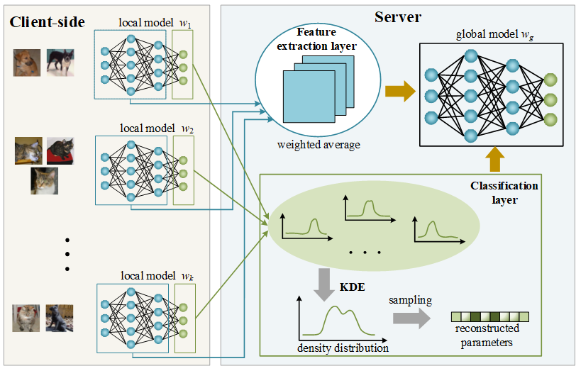

1. Non-IID Data Handling

We investigate how to handle data that are not independent and identically distributed (non-IID) across clients in FL environments. We have developed techniques that mitigate the effects of data heterogeneity and improve convergence and generalization across diverse use cases. These include approaches that use Kernel Density Estimation (KDE) to improve model aggregation in FL.
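For readers unfamiliar with non-IID data, the sketch below shows one common way such data is simulated: label-skewed partitioning driven by a Dirichlet distribution. This is an illustrative assumption, not a description of the partitioning used in our experiments. The `dirichlet_partition` function and its `alpha` and `seed` parameters are hypothetical names. A smaller `alpha` gives each client a more skewed mix of classes.

```python
import random
from collections import defaultdict
from typing import Dict, List


def dirichlet_partition(
    labels: List[int], num_clients: int, alpha: float, seed: int = 0
) -> List[List[int]]:
    """Split sample indices across clients with label skew controlled by alpha."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    clients: List[List[int]] = [[] for _ in range(num_clients)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Normalised Gamma draws form one sample from Dirichlet(alpha, ..., alpha).
        draws = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(draws) or 1.0  # guard against an all-zero draw
        start = 0
        cumulative = 0.0
        for client_id, draw in enumerate(draws):
            cumulative += draw / total
            if client_id == num_clients - 1:
                # The last client takes the remainder so no index is lost to rounding.
                end = len(indices)
            else:
                end = min(len(indices), int(round(cumulative * len(indices))))
            clients[client_id].extend(indices[start:end])
            start = end
    return clients


# Hypothetical dataset: 400 samples, 4 balanced classes, 5 clients.
labels = [i % 4 for i in range(400)]
parts = dirichlet_partition(labels, num_clients=5, alpha=0.1)
```

Every sample index is assigned to exactly one client, so the partition changes only how classes are distributed, not how much data exists overall.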

Figure: Visual representation of KDE applied to classification layers in FL.

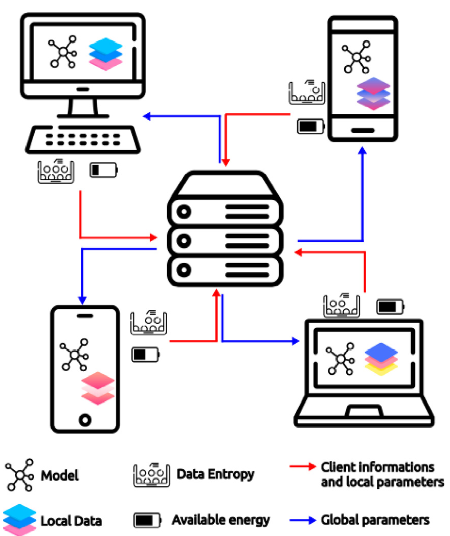

2. Energy-Efficient Federated Learning

In the field of Green Edge Cloud Computing (GECC), we developed “Eco-FL,” a methodology that optimizes energy consumption in FL by selecting clients based on their energy reserves. The approach improves sustainability without compromising model accuracy, making FL more feasible on resource-constrained hardware such as IoT devices and mobile platforms.
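The general idea of energy-aware client selection can be sketched as follows. This is a simplified illustration under assumptions, not the Eco-FL selection policy itself: the `Client` class, the normalised `energy_reserve` field, the `min_reserve` threshold, and the top-k rule are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Client:
    client_id: str
    energy_reserve: float  # hypothetical normalised reserve in [0, 1]


def select_clients(
    clients: List[Client], k: int, min_reserve: float = 0.2
) -> List[Client]:
    """Pick up to k clients with the largest reserves, skipping those below min_reserve."""
    eligible = [c for c in clients if c.energy_reserve >= min_reserve]
    eligible.sort(key=lambda c: c.energy_reserve, reverse=True)
    return eligible[:k]


# Hypothetical pool of four devices for one training round.
pool = [Client("a", 0.9), Client("b", 0.1), Client("c", 0.5), Client("d", 0.35)]
chosen = select_clients(pool, k=2)
```

In this toy round, client `b` is excluded because its reserve falls below the threshold, and the two clients with the highest reserves are selected. A real policy would likely also need to balance energy against data coverage so that low-energy clients are not permanently excluded.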

Figure: Energy-efficient client selection mechanism in FL.

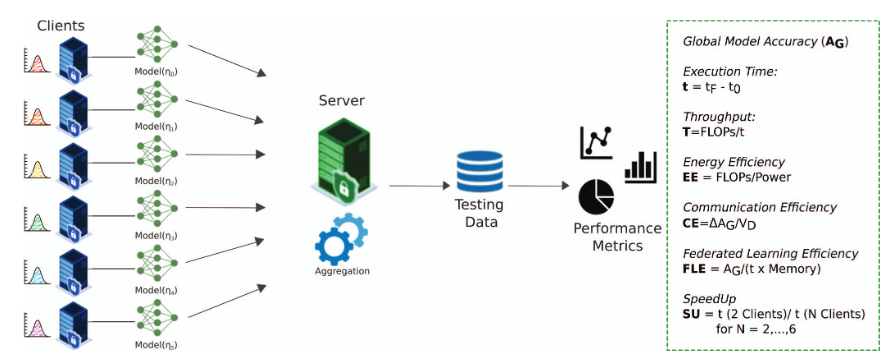

3. Federated Learning in High-Performance Computing (HPC) Environments

We have also explored the interplay between FL and HPC, focusing on optimizing federated training across heterogeneous HPC architectures. Using the Flower framework, we ran simulations to analyze the effects of non-IID data in HPC environments, evaluating energy efficiency, communication performance, and model accuracy.

Figure: Federated Learning integration in HPC environments.

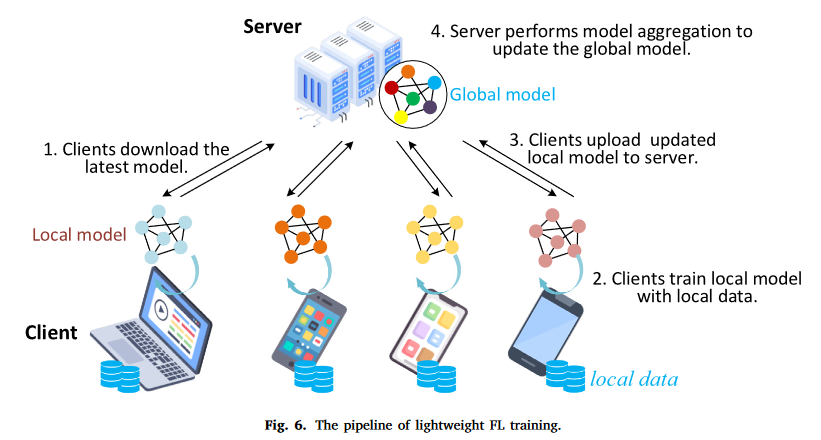

4. Lightweight Federated Learning (TinyFL)

To address device heterogeneity and limited resources, we are working on lightweight FL, particularly for IoT and embedded devices. Our techniques, inspired by TinyML, optimize FL for environments with constrained computational resources, enabling models to be trained and deployed in edge settings.
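As one example of the kind of optimization common in TinyML-style settings, the sketch below quantizes a model update to 8-bit integers before transmission. This is an assumption chosen for illustration, not a description of the TinyFL techniques themselves; `quantize_int8` and `dequantize_int8` are hypothetical helper names.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [int(round(v / scale)) for v in values], scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


# Hypothetical model update sent from a constrained device.
update = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(update)
restored = dequantize_int8(q, scale)
```

Each value then needs one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per value.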

Figure: TinyFL framework for resource-constrained devices.

Conclusion

Our research in Federated Learning (FL) addresses critical challenges such as data heterogeneity, energy efficiency, and scalability across diverse computing environments. The PRIN project “GANDALF” strengthens our commitment to advancing FL methodologies for real-world applications. Through ongoing work on lightweight FL and HPC integration, we continue to explore approaches that improve privacy, performance, and sustainability in distributed learning systems.